Webinars & Virtual Events

ObservePoint + NP Digital: How Digital Marketers Should Prepare for 3rd-Party Cookie Deprecation

Chrome's deprecation of 3rd-party cookies is a major change for digital marketers. This session covers what marketers can expect and how to adapt.

IAPP Webinar: Shining a Light on Dark Patterns

Counting Cookies: The Reliance, the Risk, and the Remedy

Do you feel prepared for the death of cookies? We wanted to know how well prepared the industry is, so we scanned the top 100 sites in the US, UK and Australian markets to find out.

ObservePoint Privacy Compliance – Marketing Analytics Summit 2021

Join Mike Fong, Solutions Engineer, to learn how to protect your customers, their data, and your reputation by ensuring privacy compliance.

The Future of Marketing in a Post-Cookie World – Alex Langshur, Cardinal Path

Learn how to thrive in the digital marketing and analytics world despite the impending death of cookies.

Solving GDPR’s Data Subject Requests Big Data Problem – Ed Devine, Fandango & Glen Horsley, RIVN

Join the discussion on the creation and rollout of a privacy program that produced four recommendations for data subject request (DSR) compliance.

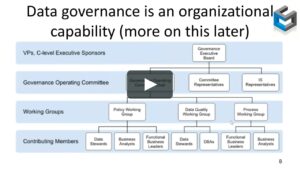

Data Governance Provides a 432% ROI – Dean Davison, Forrester

See how Forrester's Total Economic Impact™ (TEI) study of ObservePoint found that data governance provides a 432% ROI by helping to optimize customer experiences, increase revenue, improve productivity, and avoid wasted ad spend.

A Better Digital World (Introduction)

2020 has accelerated the Digital Revolution, which is being led by every marketer, analyst, and insights professional who is using data to create engaging experiences and a better digital world.

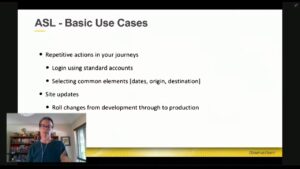

Data Governance Tips & Tricks – Jordan Avalos & Pam Frei, Southwest Airlines

Learn how to use lesser-known ObservePoint features for data governance: LiveConnect, the Action Set Library, webhook integrations, Google Sheets add-ons, and more!

Lea Pica, LeaPica.com – 3 Keys to Avoiding Presentation Zombification and Creating an Impact with Your Insights

Learn from Lea Pica, Data Storytelling Advocate, about how to help others remember your data and act upon it.

Jon Tomlinson, Metric Partners – Maximize Your Marketing Channels for Adobe Analytics

Learn how to use sub-channels to improve data quality while maximizing Adobe’s marketing channel reporting.

Peter O’Neill, Ayima – Identifying and Resolving Transaction Discrepancies

Peter O'Neill, Director of Analytics at Ayima, will discuss how to establish trust in the data captured in key conversion paths.

Chris O’Neill, ObservePoint – Perfecting Release Validation: Catch Errors Before They Destroy Your Data

Learn how to use release validation to identify analytics errors in a staging environment before going live.

3 Ways to Optimize Your Website with Test Automation: Data Quality, SEO, & Page Performance

Learn from Ali Shoukat, Marketing Technologist at Cisco, and Chris O'Neill, Solutions Engineer at ObservePoint, how to use test automation to improve various aspects of site performance.

Webinar – Migrating from Adobe DTM to Launch: A 4-Phased Approach

Learn how to migrate your legacy Adobe Dynamic Tag Management implementation over to Adobe's new TMS, Launch by Adobe.

Lori McNeill, Riptide Analytics – No Dev Resources? No Problem! 6 Technical Hacks for Analytics Practitioners

Lori walks through technical "hacks" that help analysts become more self-sufficient in getting the data they need.

Judah Phillips, SmartCurrent – Data Stewardship in Digital Analytics

Learn how to appoint a data steward and create more value with analytics.

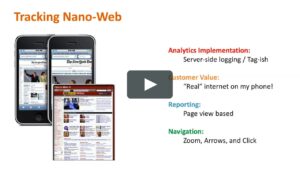

Lee Isensee, Search Discovery – Hey Siri, What Are App Analytics?

This session will cover the evolving market of mobile and app analytics, and how to use that data to succeed.

Matt Langie, MarketLinc – The Power of Human Engagement in a Digital World

Learn a unique approach to analyzing visitors' digital body language, segmenting site traffic, and delivering engagement at a human level.

Michele Kiss, Analytics Demystified – Pairing Analytics with Qualitative Methods to Understand the WHY

Learn how companies can combine quantitative and qualitative techniques to gain deeper insight into their customers’ behavior.